## Set up pseudo-distributed Hadoop in 10 minutes

---

> Environment: CentOS Linux release 7.6.1810 (Core)

1. **Software preparation**

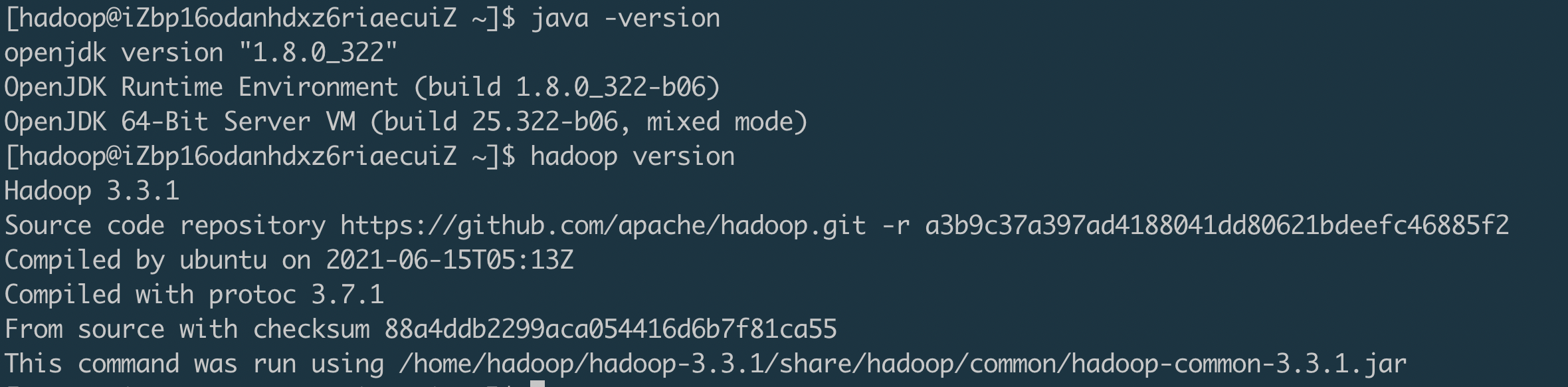

**Java**: choose and install the version you need. This guide uses 1.8.

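```shell
# List the JDK packages available from the configured repositories
yum search java | grep jdk
# Install the OpenJDK 1.8 runtime and development tools (javac)
yum install java-1.8.0-openjdk java-1.8.0-openjdk-devel
```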

**Hadoop**: download the latest Hadoop binary release and extract it. This guide uses 3.3.1.

```shell
cd ~
wget --no-check-certificate https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/stable/hadoop-3.3.1.tar.gz
tar -zxvf hadoop-3.3.1.tar.gz
```

> The mirror `https://mirrors.tuna.tsinghua.edu.cn` is used here to speed up downloads from within China.

**Configure environment variables**: edit `~/.bashrc` and add the following:

```shell
export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.322.b06-1.el7_9.x86_64
export JRE_HOME=$JAVA_HOME/jre
export HADOOP_HOME=/home/hadoop/hadoop-3.3.1
# Put the Java and Hadoop commands (bin) and the Hadoop start/stop scripts (sbin) on PATH
export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
```

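> Replace JAVA_HOME and HADOOP_HOME with the paths in your own environment.
>
> If you don't know your JAVA_HOME, run `whereis javac` to locate the javac command, then use `ll` to see where the javac file links to and derive the JDK directory from that target.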
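
The symlink lookup described in the note above can be sketched as a small shell helper. `resolve_java_home` is a hypothetical name, not part of the JDK or Hadoop. The sketch assumes GNU `readlink -f` (available on CentOS) and that javac sits in `<JDK root>/bin`.

```shell
# Hypothetical helper: print the JDK root directory for a given javac path.
# Assumes GNU readlink and the usual <JDK root>/bin/javac layout.
resolve_java_home() {
  # Follow every symlink until the real javac file is reached
  real_javac="$(readlink -f "$1")" || return 1
  # Strip "/javac" and then "/bin" to get the JDK root
  dirname "$(dirname "$real_javac")"
}

# Print a candidate JAVA_HOME if javac is on PATH
if command -v javac >/dev/null 2>&1; then
  resolve_java_home "$(command -v javac)"
fi
```

The printed directory is a candidate value for `JAVA_HOME` in `~/.bashrc`; check that it contains `bin/javac` before using it.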

After adding these lines, run:

```shell
# Reload the shell configuration so the new variables take effect
source ~/.bashrc
java -version
hadoop version
```

Both commands should print the expected Java and Hadoop versions.

2. **Configure passwordless SSH login to the local machine**

Run:

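```shell
# Generate an RSA key pair with an empty passphrase
ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa
# Authorize the new public key for logins to this account
cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
chmod 0600 ~/.ssh/authorized_keys
```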

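> When this is done, run `ssh localhost` to check that passwordless login works.

3. **Modify the configuration files**

Pseudo-distributed mode deploys all services on a single machine. Before starting them, a few configuration files need to be modified.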
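
If `ssh localhost` still asks for a password, overly permissive modes on the SSH directory are a common cause, because sshd in its default strict mode ignores keys stored in files that other users can write to. The following is a minimal sketch that tightens those modes; `fix_ssh_perms` is a hypothetical helper name, and the default path `~/.ssh` is an assumption.

```shell
# Hypothetical helper: restrict an SSH directory and its authorized_keys
# file to the owning user. Defaults to ~/.ssh when no argument is given.
fix_ssh_perms() {
  ssh_dir="${1:-$HOME/.ssh}"
  chmod 700 "$ssh_dir"
  if [ -f "$ssh_dir/authorized_keys" ]; then
    chmod 600 "$ssh_dir/authorized_keys"
  fi
}
```

After calling `fix_ssh_perms`, a non-interactive check such as `ssh -o BatchMode=yes localhost true` fails immediately instead of prompting when key-based login is not working.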

**core-site.xml**

Modify `$HADOOP_HOME/etc/hadoop/core-site.xml` as follows:

```xml
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/hadoop/data/hadoop</value>
  </property>
</configuration>
```
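
To confirm that an edit landed in the file you expect, a property value can be read back from the XML. The sketch below uses plain `awk` and assumes each `<name>` and `<value>` element sits on a single line, as in the snippets in this guide; `get_prop` is a hypothetical helper, not a Hadoop command.

```shell
# Hypothetical helper: print the <value> that follows <name>KEY</name>.
# Usage: get_prop FILE KEY
# Assumes each <name> and <value> element fits on one line.
get_prop() {
  awk -v key="$2" '
    # Remember that the requested property name has been seen
    index($0, "<name>" key "</name>") { found = 1 }
    # Print the text between <value> and </value>, then stop
    found && match($0, /<value>.*<\/value>/) {
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
  ' "$1"
}
```

For example, `get_prop "$HADOOP_HOME/etc/hadoop/core-site.xml" fs.defaultFS` should print the URI configured above. Once Hadoop is on the PATH, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolves.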

**yarn-site.xml**

Modify `$HADOOP_HOME/etc/hadoop/yarn-site.xml` as follows:

```xml
<configuration>
  <!-- Site specific YARN configuration properties -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.env-whitelist</name>
    <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_HOME,PATH,LANG,TZ,HADOOP_MAPRED_HOME</value>
  </property>
</configuration>
```

**hdfs-site.xml**

Modify `$HADOOP_HOME/etc/hadoop/hdfs-site.xml` as follows:

```xml
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/home/hadoop/data/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/home/hadoop/data/dfs/data</value>
  </property>
</configuration>
```
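
The storage paths configured in `core-site.xml` and `hdfs-site.xml` must be writable by the user that runs Hadoop. One way to make sure of that, sketched below under the assumption that the base directory is `/home/hadoop/data` as in this guide, is to create the directories up front as that user. `make_hadoop_dirs` is a hypothetical helper name.

```shell
# Hypothetical helper: create the storage directories used in this guide.
# The optional argument overrides the base directory.
make_hadoop_dirs() {
  base="${1:-/home/hadoop/data}"
  # hadoop.tmp.dir, dfs.namenode.name.dir and dfs.datanode.data.dir
  mkdir -p "$base/hadoop" "$base/dfs/name" "$base/dfs/data"
}
```

Calling `make_hadoop_dirs` with no argument creates the three paths under `/home/hadoop/data`; pass a different base directory if your configuration uses one.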

**mapred-site.xml**

Modify `$HADOOP_HOME/etc/hadoop/mapred-site.xml` as follows:

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.application.classpath</name>
    <value>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value>
  </property>
</configuration>
```

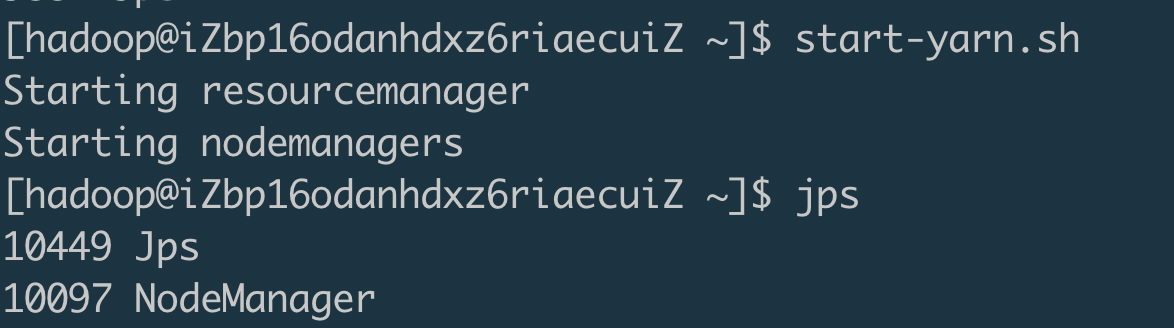

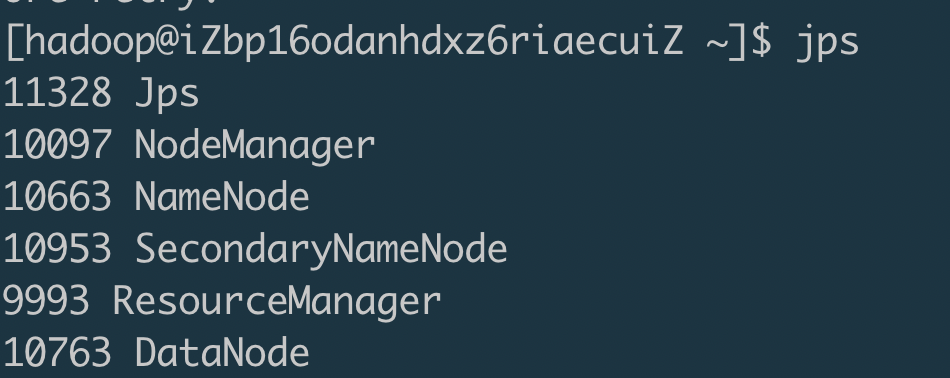

4. **Start**

**Start YARN**

```shell
start-yarn.sh
```

**Format HDFS**

```shell
hdfs namenode -format
```

**Start HDFS**

```shell
start-dfs.sh
```
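
To check that everything came up, the JDK's `jps` tool lists the running Java processes. The sketch below reads `jps` output on standard input and prints the names of any daemons that are absent. `missing_daemons` is a hypothetical helper, and the list of five daemon names is what a pseudo-distributed setup with HDFS and YARN normally runs.

```shell
# Hypothetical helper: read `jps` output on stdin and print each expected
# Hadoop daemon that does not appear in it. Prints nothing if all are up.
missing_daemons() {
  jps_output="$(cat)"
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # -w matches whole words, so "NameNode" does not match "SecondaryNameNode"
    if ! printf '%s\n' "$jps_output" | grep -qw "$daemon"; then
      echo "$daemon"
    fi
  done
}
```

Run it as `jps | missing_daemons`. For any daemon it reports, the files under `$HADOOP_HOME/logs` are the first place to look.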

Done!
